I am sure I am not the only one who has struggled to install the Hadoop Eclipse plugin. The plugin depends heavily on your environment (Eclipse, Ant, JDK) and on your Hadoop distribution and version. Moreover, it only supports the old MapReduce API.

Creating a Maven project for Hadoop is so simple that spending time building this plugin is not worth it. This article describes how to set up a first Maven Hadoop project for Cloudera CDH4 in Eclipse.

Prerequisites

– Maven 3

– JDK 1.6

– Eclipse with the m2eclipse plugin installed

Add Cloudera repository

Cloudera jar files are not available in the default Maven Central repository. You need to add the Cloudera repository explicitly in your settings.xml (${HOME}/.m2/settings.xml).

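    <settings>
      <profiles>
        <profile>
          <id>standard-extra-repos</id>
          <activation>
            <activeByDefault>true</activeByDefault>
          </activation>
          <repositories>
            <repository>
              <!-- Central Repository -->
              <id>central</id>
              <url>http://repo1.maven.org/maven2/</url>
              <releases>
                <enabled>true</enabled>
              </releases>
              <snapshots>
                <enabled>true</enabled>
              </snapshots>
            </repository>
            <repository>
              <!-- Cloudera Repository -->
              <id>cloudera</id>
              <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
              <releases>
                <enabled>true</enabled>
              </releases>
              <snapshots>
                <enabled>true</enabled>
              </snapshots>
            </repository>
          </repositories>
        </profile>
      </profiles>
    </settings>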

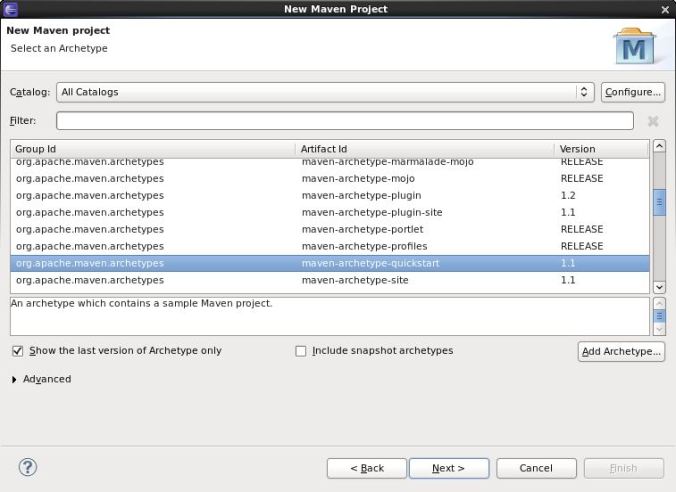

Create Maven project

On eclipse, create a new Maven project as follow

Add Hadoop Nature

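For the Cloudera CDH4 distribution, open the pom.xml file and add the following dependencies:

    <dependencyManagement>
      <dependencies>
        <dependency>
          <groupId>jdk.tools</groupId>
          <artifactId>jdk.tools</artifactId>
          <version>1.6</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
          <version>2.0.0-cdh4.0.0</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-auth</artifactId>
          <version>2.0.0-cdh4.0.0</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>2.0.0-cdh4.0.0</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-core</artifactId>
          <version>2.0.0-mr1-cdh4.0.1</version>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit-dep</artifactId>
          <version>4.8.2</version>
        </dependency>
      </dependencies>
    </dependencyManagement>

    <dependencies>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-auth</artifactId>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-core</artifactId>
      </dependency>
      <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.10</version>
        <scope>test</scope>
      </dependency>
    </dependencies>

    <build>
      <plugins>
        <plugin>
          <groupId>org.apache.maven.plugins</groupId>
          <artifactId>maven-compiler-plugin</artifactId>
          <version>2.1</version>
          <configuration>
            <source>1.6</source>
            <target>1.6</target>
          </configuration>
        </plugin>
      </plugins>
    </build>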

Download dependencies

Now that you have added the Cloudera repository and created your project, download the dependencies. This is easily done by right-clicking your Eclipse project and choosing “update Maven dependencies”.

All these dependencies should now be present in your local .m2 repository.

    [developer@localhost ~]$ find .m2/repository/org/apache/hadoop -name "*.jar"
    .m2/repository/org/apache/hadoop/hadoop-tools/1.0.4/hadoop-tools-1.0.4.jar
    .m2/repository/org/apache/hadoop/hadoop-common/2.0.0-cdh4.0.0/hadoop-common-2.0.0-cdh4.0.0-sources.jar
    .m2/repository/org/apache/hadoop/hadoop-common/2.0.0-cdh4.0.0/hadoop-common-2.0.0-cdh4.0.0.jar
    .m2/repository/org/apache/hadoop/hadoop-core/2.0.0-mr1-cdh4.0.1/hadoop-core-2.0.0-mr1-cdh4.0.1-sources.jar
    .m2/repository/org/apache/hadoop/hadoop-core/2.0.0-mr1-cdh4.0.1/hadoop-core-2.0.0-mr1-cdh4.0.1.jar
    .m2/repository/org/apache/hadoop/hadoop-hdfs/2.0.0-cdh4.0.0/hadoop-hdfs-2.0.0-cdh4.0.0.jar
    .m2/repository/org/apache/hadoop/hadoop-streaming/1.0.4/hadoop-streaming-1.0.4.jar
    .m2/repository/org/apache/hadoop/hadoop-auth/2.0.0-cdh4.0.0/hadoop-auth-2.0.0-cdh4.0.0.jar
    [developer@localhost ~]$
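The paths in the listing above follow Maven's standard local repository layout: the groupId with dots turned into directories, then the artifactId, then the version. Here is a minimal sketch of that mapping, assuming the default repository location under ${HOME}/.m2/repository. The class and method names (LocalRepoPath, jarPath) are made up for illustration and are not part of Maven.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

public class LocalRepoPath {

    // Maps Maven coordinates to the jar's relative path inside the local repository.
    static String jarPath(String groupId, String artifactId, String version) {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version
                + "/" + artifactId + "-" + version + ".jar";
    }

    public static void main(String[] args) {
        // Coordinates taken from the pom.xml above.
        String relative = jarPath("org.apache.hadoop", "hadoop-core", "2.0.0-mr1-cdh4.0.1");

        // Assumption: the local repository lives in its default location.
        Path repo = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        Path jar = repo.resolve(relative);

        System.out.println(jar + (jar.toFile().exists() ? " (found)" : " (missing)"));
    }
}
```

If a jar is reported as missing, the dependency was probably not downloaded, which usually points back to the repository configuration in settings.xml.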

Create WordCount example

Create your driver code

    package com.aamend.hadoop.MapReduce;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    public class WordCount {

        public static void main(String[] args) throws IOException,
                InterruptedException, ClassNotFoundException {

            Path inputPath = new Path(args[0]);
            Path outputDir = new Path(args[1]);

            // Create configuration
            Configuration conf = new Configuration(true);

            // Create job
            Job job = new Job(conf, "WordCount");
            job.setJarByClass(WordCountMapper.class);

            // Setup MapReduce
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);
            job.setNumReduceTasks(1);

            // Specify key / value
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Input
            FileInputFormat.addInputPath(job, inputPath);
            job.setInputFormatClass(TextInputFormat.class);

            // Output
            FileOutputFormat.setOutputPath(job, outputDir);
            job.setOutputFormatClass(TextOutputFormat.class);

            // Delete output if exists
            FileSystem hdfs = FileSystem.get(conf);
            if (hdfs.exists(outputDir))
                hdfs.delete(outputDir, true);

            // Execute job
            int code = job.waitForCompletion(true) ? 0 : 1;
            System.exit(code);
        }
    }

Create Mapper class

    package com.aamend.hadoop.MapReduce;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WordCountMapper extends
            Mapper<Object, Text, Text, IntWritable> {

        private static final IntWritable ONE = new IntWritable(1);
        private final Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {

            // Each input line is treated as comma-separated values
            String[] csv = value.toString().split(",");
            for (String str : csv) {
                // Emit (word, 1) for every token
                word.set(str);
                context.write(word, ONE);
            }
        }
    }

Create your Reducer class

    package com.aamend.hadoop.MapReduce;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WordCountReducer extends
            Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        public void reduce(Text text, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {

            // Sum all the counts emitted for this word
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            context.write(text, new IntWritable(sum));
        }
    }
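To see what the mapper and reducer above compute without a cluster, the same logic can be sketched in plain Java. This is only an in-memory illustration and not Hadoop code: the class LocalWordCount and its count method are hypothetical names, and the grouping that Hadoop performs between the map and reduce phases is replaced here by a sorted map.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LocalWordCount {

    // Mirrors the mapper (split each line on commas, one count per token)
    // and the reducer (sum the counts of each distinct token).
    static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> totals = new TreeMap<String, Integer>();
        for (String line : lines) {
            // "map" step: one token per comma-separated field
            for (String token : line.split(",")) {
                // "reduce" step: add 1 to the running total for this token
                Integer current = totals.get(token);
                totals.put(token, current == null ? 1 : current + 1);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("a,b,a", "b,c");
        System.out.println(count(lines)); // prints {a=2, b=2, c=1}
    }
}
```

Note that, like the mapper, this splits on commas only, so a line of space-separated words would be counted as a single token.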

Build project

Maven builds the jar file out of the box. Run the following command:

mvn clean install

You should see output similar to the following:

    .../...
    [INFO]
    [INFO] --- maven-jar-plugin:2.3.2:jar (default-jar) @ MapReduce ---
    [INFO] Building jar: /home/developer/Workspace/hadoop/MapReduce/target/MapReduce-0.0.1-SNAPSHOT.jar
    [INFO]
    [INFO] --- maven-install-plugin:2.3.1:install (default-install) @ MapReduce ---
    [INFO] Installing /home/developer/Workspace/hadoop/MapReduce/target/MapReduce-0.0.1-SNAPSHOT.jar to /home/developer/.m2/repository/com/aamend/hadoop/MapReduce/0.0.1-SNAPSHOT/MapReduce-0.0.1-SNAPSHOT.jar
    [INFO] Installing /home/developer/Workspace/hadoop/MapReduce/pom.xml to /home/developer/.m2/repository/com/aamend/hadoop/MapReduce/0.0.1-SNAPSHOT/MapReduce-0.0.1-SNAPSHOT.pom
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 9.159s
    [INFO] Finished at: Sat May 25 00:35:56 GMT+02:00 2013
    [INFO] Final Memory: 16M/212M
    [INFO] ------------------------------------------------------------------------

The jar file should now be available in the project’s target directory (and also in your ${HOME}/.m2 local repository).

This jar is ready to be executed on your Hadoop environment.

hadoop jar MapReduce-0.0.1-SNAPSHOT.jar com.aamend.hadoop.MapReduce.WordCount input output

Each time I need to create a new Hadoop project, I simply copy the pom.xml template described above, and that’s it.


Very nicely done Antoine. I’ll give this a shot shortly. Cheers.

Hi Antoine,

I am following the article below to install ‘hadoop-1.0.3.tar.gz’:

http://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-single-node-cluster/

So, will the “Cloudera CDH4” jars (from your article) clash with hadoop-1.0.3 when running Hadoop?

I mean, can I run ‘MapReduce-0.0.1-SNAPSHOT.jar’ directly against my installation without any hiccup, given that the two distributions are different (CDH4 jars vs. hadoop-1.0.3 jars)?

Thanks

Amp

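The article you were following is a really good introduction to Hadoop. Unfortunately, this “Cloudera project” will not work against your Apache distribution. That said, you need to update the pom.xml with the right Apache dependencies:

    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-core</artifactId>
      <version>1.2.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>1.2.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>0.23.9</version>
    </dependency>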

Thanks Antoine.

I will try and let you know.

Hi Antoine,

It is a nice blog. It was very useful, and I was able to get a simple application ready in a short time. Thank you.

But I have problems setting up my Hadoop environment. I am using Windows XP, and I downloaded Hadoop 2.2.0. To check whether my Hadoop environment is ready, I ran “hadoop [--config confdir] version” in my command prompt, but it fails with an error saying JAVA_HOME is incorrectly set. My Java installation is located at “C:\Program Files\Java\jdk1.7.0\bin”. When I searched the web for this problem, I learned that I need to set “JAVA_HOME” in the hadoop-env.sh file. I tried setting it to “C:\Program Files\Java\jdk1.7.0\bin”, but I still get the same error.

Your help will be much appreciated and it will be very useful for the beginners in hadoop.

Thanks in advance,

Priya.

JAVA_HOME should be “C:\Program Files\Java\jdk1.7.0” (without \bin).
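As a quick sanity check, the rule above (JAVA_HOME points at the JDK root, not at its bin directory) can be sketched in a few lines of Java. This is an illustrative helper only: JavaHomeCheck and jdkRoot are hypothetical names, and the check just strips a trailing bin directory rather than verifying that a real JDK is installed there.

```java
public class JavaHomeCheck {

    // Returns the JDK root: drops trailing separators and a trailing "bin" directory, if present.
    static String jdkRoot(String javaHome) {
        String path = javaHome.trim();
        // Remove trailing separators such as "\" or "/".
        while (path.endsWith("\\") || path.endsWith("/")) {
            path = path.substring(0, path.length() - 1);
        }
        // Case-insensitive check for a trailing "\bin" or "/bin".
        int start = path.length() - 4;
        boolean endsWithBin = path.regionMatches(true, start, "\\bin", 0, 4)
                || path.regionMatches(true, start, "/bin", 0, 4);
        return endsWithBin ? path.substring(0, start) : path;
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        if (javaHome == null) {
            System.out.println("JAVA_HOME is not set");
        } else if (!javaHome.equals(jdkRoot(javaHome))) {
            System.out.println("JAVA_HOME should probably be " + jdkRoot(javaHome));
        } else {
            System.out.println("JAVA_HOME looks fine: " + javaHome);
        }
    }
}
```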

Hey,

I followed your tutorial carefully. I am running Windows 8 with Cygwin installed and added to PATH, yet I get the following errors:

Missing artifact org.apache.hadoop:hadoop-core:jar:2.0.0-mr1-cdh4.0.1 pom.xml /MapReduce

The container ‘Maven Dependencies’ references non existing library ‘C:\Users\shay\.m2\repository\org\apache\hadoop\hadoop-hdfs\2.0.0-cdh4.0.0\hadoop-hdfs-2.0.0-cdh4.0.0.jar’

Missing artifact org.apache.hadoop:hadoop-auth:jar:2.0.0-cdh4.0.0 pom.xml /MapReduce

Missing artifact org.apache.hadoop:hadoop-common:jar:2.0.0-cdh4.0.0 pom.xml /MapReduce

Missing artifact org.apache.hadoop:hadoop-hdfs:jar:2.0.0-cdh4.0.0 pom.xml /MapReduce

Any help will be appreciated.

Maven was not able to download the Hadoop dependencies. Are you sure your ~/.m2/settings.xml is correct? Is the %M2_HOME% variable set?

M2_HOME is set, but there’s no settings file under /.m2 😮

I’ll look it up, thanks a lot!

Hi Shay,

Create the settings.xml file manually in your .m2 directory.

Hi Antoine,

I have been trying to get Maven to download the libraries for the past week, but this error won’t go away. I did everything as you described, but Maven is still unable to connect to the Cloudera repository. I am using Eclipse Juno on Windows 7, and I have CDH ready on a Linux machine.

ArtifactDescriptorException: Failed to read artifact descriptor for org.apache.hadoop:hadoop-hdfs:jar:2.0.0-cdh4.0.0: ArtifactResolutionException: Failure to transfer org.apache.hadoop:hadoop-hdfs:pom:2.0.0-cdh4.0.0 from https://repository.cloudera.com.artifactory/cloudera-repos was cached in the local repository, resolution will not be reattempted until the update interval of cloudera has elapsed or updates are forced. Original error: Could not transfer artifact org.apache.hadoop:hadoop-hdfs:pom:2.0.0-cdh4.0.0 from/to cloudera (https://repository.cloudera.com.artifactory/cloudera-repos): null to https://repository.cloudera.com.artifactory/cloudera-repos/org/apache/hadoop/hadoop-hdfs/2.0.0-cdh4.0.0/hadoop-hdfs-2.0.0-cdh4.0.0.pom.

I would appreciate hearing back if possible.

Thanks

Thanks, I fixed it!

Hi Payal, I am facing the same issue you reported.

Could you please explain how you fixed it? Thanks a lot in advance.

Regards,

Raja.

rajamahesh.aravapalli@yahoo.com

Looks like a common issue:

– Make sure ~/.m2/settings.xml exists and is correct

– Make sure ${M2_HOME} variable is set
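One way to run the first check is to parse settings.xml and print the repository URLs Maven will actually use. The sketch below does that with the JDK's built-in XML parser. It assumes the default settings location (${HOME}/.m2/settings.xml), and the class and method names (SettingsCheck, repositoryUrls) are hypothetical.

```java
import java.io.File;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

public class SettingsCheck {

    // Parses XML and fails loudly if the document is not well-formed.
    static Document parse(InputSource source) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(source);
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse XML", e);
        }
    }

    // Collects the <url> of every <repository> element in the document.
    static List<String> repositoryUrls(Document doc) {
        List<String> urls = new ArrayList<String>();
        NodeList repos = doc.getElementsByTagName("repository");
        for (int i = 0; i < repos.getLength(); i++) {
            NodeList urlNodes = ((Element) repos.item(i)).getElementsByTagName("url");
            if (urlNodes.getLength() > 0) {
                urls.add(urlNodes.item(0).getTextContent().trim());
            }
        }
        return urls;
    }

    static List<String> repositoryUrls(String xml) {
        return repositoryUrls(parse(new InputSource(new StringReader(xml))));
    }

    public static void main(String[] args) {
        // Assumption: settings.xml is in its default per-user location.
        File settings = new File(new File(System.getProperty("user.home"), ".m2"), "settings.xml");
        if (!settings.isFile()) {
            System.out.println("Not found: " + settings);
            return;
        }
        for (String url : repositoryUrls(parse(new InputSource(settings.toURI().toString())))) {
            System.out.println("repository url: " + url);
        }
    }
}
```

Compare the printed URLs character by character with the ones in the article: a single mistyped character in the repository URL is enough to make every download fail.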

Easy to follow step by step, thanks.

I am following the same instructions, but I get this exception. What is wrong here?

    Exception in thread "main" java.lang.ClassNotFoundException: src.main.java.com.hadoop.bi.MapReduce.MaxTemperature
        at java.net.URLClassLoader$1.run(URLClassLoader.java:366)
        at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
        at java.security.AccessController.doPrivileged(Native Method)
        at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
        at java.lang.Class.forName0(Native Method)
        at java.lang.Class.forName(Class.java:270)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:201)

run :

[root@dev MapReduce]# hadoop jar target/MapReduce-0.0.1-SNAPSHOT.jar src/main/java/com/hadoop/bi/MapReduce/MaxTemperature sample.txt /out

and this is my main class:

    // cc MaxTemperature Application to find the maximum temperature in the weather dataset
    // vv MaxTemperature
    package com.hadoop.bi.MapReduce;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class MaxTemperature {

        public static void main(String[] args) throws Exception {
            if (args.length != 2) {
                System.err.println("Usage: MaxTemperature <input path> <output path>");
                System.exit(-1);
            }

            Configuration conf = new Configuration();
            Job job = new Job(conf, "MaxTemperature");
            job.setJarByClass(MaxTemperature.class);

            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            job.setMapperClass(MaxTemperatureMapper.class);
            job.setReducerClass(MaxTemperatureReducer.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
    // ^^ MaxTemperature

The code looks correct. The problem is in how you run the job: hadoop jar expects the fully qualified class name, not the source path, so pass com.hadoop.bi.MapReduce.MaxTemperature instead of src/main/java/com/hadoop/bi/MapReduce/MaxTemperature. Also make sure MaxTemperature.class is included in MapReduce-0.0.1-SNAPSHOT.jar:

$ jar -tf MapReduce-0.0.1-SNAPSHOT.jar | grep MaxTemperature

You can also check the WordCount Maven example on my GitHub account: https://github.com/aamend/hadoop-mapreduce/blob/master/sandbox/src/main/java/com/aamend/hadoop/mapreduce/sandbox/WordCount.java
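The stack trace in the question shows the loader looking for src.main.java.com.hadoop.bi.MapReduce.MaxTemperature, which means a source path was passed where a fully qualified class name is expected. The following sketch shows how the two relate, assuming the standard Maven layout where sources live under src/main/java. MainClassName and fromSourcePath are hypothetical names used only for this illustration.

```java
public class MainClassName {

    // Turns a source path such as "src/main/java/com/example/Foo.java" into "com.example.Foo".
    static String fromSourcePath(String path) {
        String normalized = path.replace('\\', '/');

        // Assumption: standard Maven source root.
        String root = "src/main/java/";
        int index = normalized.indexOf(root);
        if (index >= 0) {
            normalized = normalized.substring(index + root.length());
        }

        // Drop the file extension if the path points at the .java file.
        if (normalized.endsWith(".java")) {
            normalized = normalized.substring(0, normalized.length() - ".java".length());
        }
        return normalized.replace('/', '.');
    }

    public static void main(String[] args) {
        // prints com.hadoop.bi.MapReduce.MaxTemperature
        System.out.println(fromSourcePath("src/main/java/com/hadoop/bi/MapReduce/MaxTemperature"));
    }
}
```

The resulting name is what goes on the command line after the jar file, followed by the input and output paths.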


I have installed Hadoop (Cloudera CDH4) on Ubuntu. By following these steps, can I run MapReduce examples myself? Thanks in advance. Please reply quickly.

Regards,

Arindam Bose

Sure you can do map reduce examples all by yourself… but what’s your question?

Just to add: I used the same link as Amp below, so I believe I got part of the answer. Can you point me to a good site for learning Hadoop from scratch (both MapReduce and administration)? I will give this a shot and reply back quickly.

Thanks Again!!!

Definitive guide should be quite a good start for both Dev and Admin sides

http://shop.oreilly.com/product/0636920021773.do

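Another thing: I tried this but am getting an error. My pom file is below:

    <project>
      <modelVersion>4.0.0</modelVersion>
      <groupId>com.aamend.com</groupId>
      <artifactId>MapReduce1</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <packaging>jar</packaging>
      <name>MapReduce1</name>
      <url>http://maven.apache.org</url>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
      </properties>

      <dependencies>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>3.8.1</version>
          <scope>test</scope>
        </dependency>
      </dependencies>

      <dependencyManagement>
        <dependencies>
          <dependency>
            <groupId>jdk.tools</groupId>
            <artifactId>jdk.tools</artifactId>
            <version>1.6</version>
          </dependency>
          <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.0.0-cdh4.0.0</version>
          </dependency>
          <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-auth</artifactId>
            <version>2.0.0-cdh4.0.0</version>
          </dependency>
          <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.0.0-cdh4.0.0</version>
          </dependency>
          <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-core</artifactId>
            <version>2.0.0-mr1-cdh4.0.1</version>
          </dependency>
          <dependency>
            <groupId>junit</groupId>
            <artifactId>junit-dep</artifactId>
            <version>4.8.2</version>
          </dependency>
        </dependencies>
      </dependencyManagement>

      <dependencies>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-auth</artifactId>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-core</artifactId>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.10</version>
          <scope>test</scope>
        </dependency>
      </dependencies>

      <build>
        <plugins>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.1</version>
            <configuration>
              <source>1.6</source>
              <target>1.6</target>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </project>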

The plugins tag is throwing an error :(. I then need to right-click > Maven > Update Project (I am using Juno and Maven 3 as per your advice). Please help.

What’s the error ?? Please elaborate

I am getting this error. What could be the issue?

    $ hadoop jar /tmp/MapReduce-0.0.1-SNAPSHOT.jar com.chan.hadoop.MapReduce.WordCount input output
    15/10/20 23:18:36 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    15/10/20 23:18:38 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id
    15/10/20 23:18:38 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=
    15/10/20 23:18:39 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    15/10/20 23:18:39 INFO mapreduce.JobSubmitter: Cleaning up the staging area file:/tmp/hadoop-a_campsb/mapred/staging/a_campsb678466908/.staging/job_local678466908_0001
    Exception in thread "main" org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: hdfs://fastdevl0543.svr.emea.jpmchase.net:9000/user/a_campsb/input
        at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.singleThreadedListStatus(FileInputFormat.java:321)
        at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:264)
        at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.getSplits(FileInputFormat.java:385)
        at org.apache.hadoop.mapreduce.JobSubmitter.writeNewSplits(JobSubmitter.java:597)
        at org.apache.hadoop.mapreduce.JobSubmitter.writeSplits(JobSubmitter.java:614)
        at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:492)
        at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1296)
        at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1293)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Unknown Source)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1628)
        at org.apache.hadoop.mapreduce.Job.submit(Job.java:1293)
        at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1314)
        at com.chan.hadoop.MapReduce.WordCount.main(WordCount.java:54)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(Unknown Source)
        at java.lang.reflect.Method.invoke(Unknown Source)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Make sure your input path exists on HDFS: the relative path input resolves to /user/a_campsb/input, as shown in the error message.

PS: Have fun at JPMorgan !

Thanks so much Antoine! This tutorial was super easy to follow.