I’m describing here how I set SQL Developer to connect / query my Spark cluster. I made it work on my local environment below:

- Ubuntu precise 64 bits (1 master, 2 slaves)

- Hadoop Hortonworks 2.4.0.2.1.5.0-695

- Hive version 0.13.0.2.1.5.0-695, metastore hosted on MySQL database

- Spark 1.1.0 prebuilt for Hadoop 2.4

- SQL Developer 4.0.3.16

Note that I’ve successfully tested same setup on a 20 nodes cluster on AWS (EMR)

Preparing your Spark environment

First of all, you will have to make sure Spark SQL is able to connect Hive metastore. Simply copy hive-site.xml file in Spark conf directory

sudo cp /etc/hive/conf/hive-site.xml /usr/lib/spark/conf/

Open a hive shell, and create a dummy table

hive> CREATE TABLE dummy (foo STRING, bar STRING); OK Time taken: 2.555 seconds

Now open a spark-sql shell, and make sure you can find the newly created table. Note that –master local can be used here since we’re not running any job.

vagrant@vagrant:~$ /usr/lib/spark/bin/spark-sql --master local ../.. spark-sql> show tables; ../.. dummy ../.. Time taken: 3.452 seconds ../..

Great, looks like Spark-SQL is now connected to Hive metastore…

Install Hive JDBC drivers on SQL Developer

SQL Developer is quite a nice tool – at least for Oracle databases. In order to make it work with Hive / Spark, we need to download the Hive connectors first. I found them on Cloudera website. Note that I was not able to find same from Hortonworks. This could be an issue if I was using Hive natively, but it worth giving a try on Spark first.

Unzip the downloaded archive (I’m using JDBC4 package), and open SQLDeveloper.

You will have to add third-part connectors from preferences -> database -> Third party JDBC driver. Add all the jars included in your archive

~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/libthrift-0.9.0.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/HiveJDBC4.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/log4j-1.2.14.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/TCLIServiceClient.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/ql.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/hive_metastore.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/slf4j-api-1.5.8.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/hive_service.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/slf4j-log4j12-1.5.8.jar ~/Downloads/Cloudera_HiveJDBC_2.5.4.1006/Cloudera_HiveJDBC4_2.5.4.1006/libfb303-0.9.0.jar

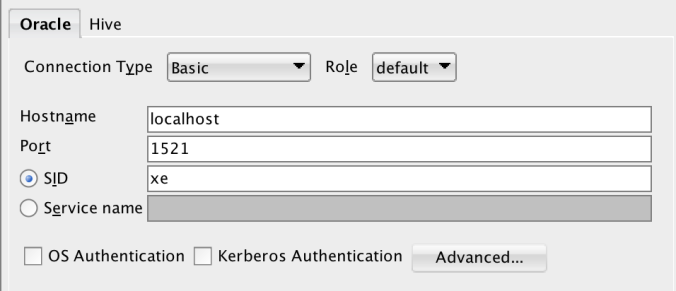

Once added, restart SQL Developer, and ohhh, awesome, a new tab “Hive” magically appeared 🙂

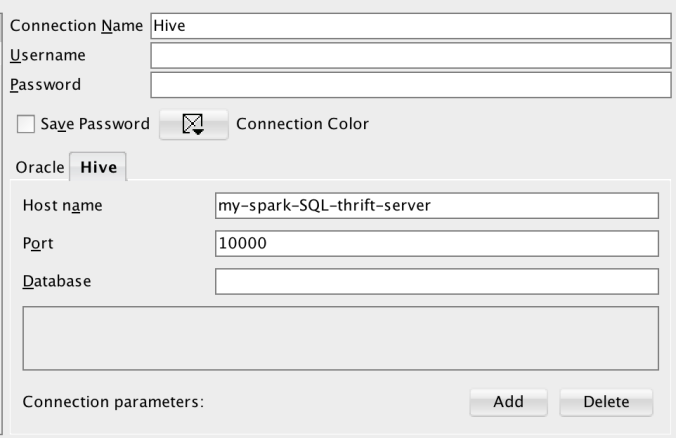

Open this tab and create a new connection to your HiveServer2 as follows

And test connection ! Assuming HiveServer2 / Hive Metastore is running, your connection should be established and a new Hive Worksheet should pop up. At this stage, connection From SQL Developer to Hive is up, but SQL are executed on Hive, not Spark !

Now we need to switch back to Spark !

The goal is now to use Spark thrift interface in lieu of vanilla HiveServer2. Stop HiveServer2 daemon, and start Spark thrift interface as follows..

vagrant@vagrant:~/usr/lib/spark/sbin/start-thriftserver.sh --master yarn-client Spark assembly has been built with Hive, including Datanucleus jars on classpath ../.. 14/10/25 15:11:49 INFO service.AbstractService: Service:OperationManager is inited. 14/10/25 15:11:49 INFO service.AbstractService: Service: SessionManager is inited. 14/10/25 15:11:49 INFO service.AbstractService: Service: CLIService is inited. 14/10/25 15:11:49 INFO service.AbstractService: Service:ThriftBinaryCLIService is inited. 14/10/25 15:11:49 INFO service.AbstractService: Service: HiveServer2 is inited. 14/10/25 15:11:49 INFO service.AbstractService: Service:OperationManager is started. 14/10/25 15:11:49 INFO service.AbstractService: Service:SessionManager is started. 14/10/25 15:11:49 INFO service.AbstractService: Service:CLIService is started. 14/10/25 15:11:49 INFO hive.metastore: Trying to connect to metastore with URI thrift://192.168.56.101:9083 14/10/25 15:11:49 INFO hive.metastore: Waiting 1 seconds before next connection attempt. 14/10/25 15:11:50 INFO hive.metastore: Connected to metastore. 14/10/25 15:11:50 INFO service.AbstractService: Service:ThriftBinaryCLIService is started. 14/10/25 15:11:50 INFO service.AbstractService: Service:HiveServer2 is started. 14/10/25 15:11:50 INFO thriftserver.HiveThriftServer2: HiveThriftServer2 started 14/10/25 15:11:50 INFO thrift.ThriftCLIService: ThriftBinaryCLIService listening on 0.0.0.0/0.0.0.0:10000 ../..

Looking at application master log file, Spark is actually embedding its own HiveServer2 into a Spark job. Connection will be apparently dropped if Spark job ends up, so make sure Spark job is not killed !

I’m using yarn-client to handle my JDBC connections, but this should support spark standalone clusters as well

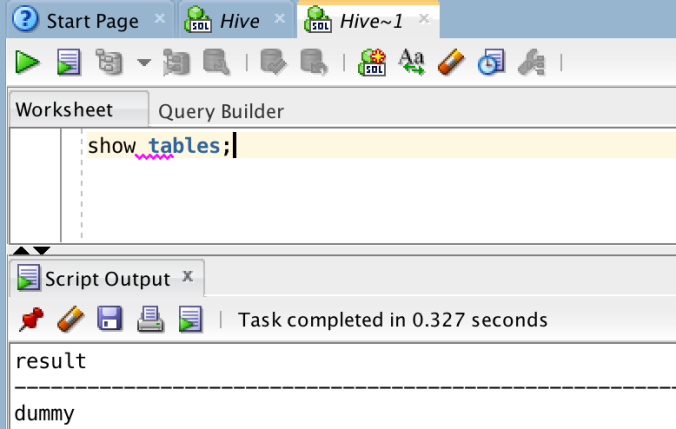

Now get back to your SQL Developer, and restore connection to Hive (HiveServer is now embedded into a Spark job), and you’re now connected to Spark !

And voila !

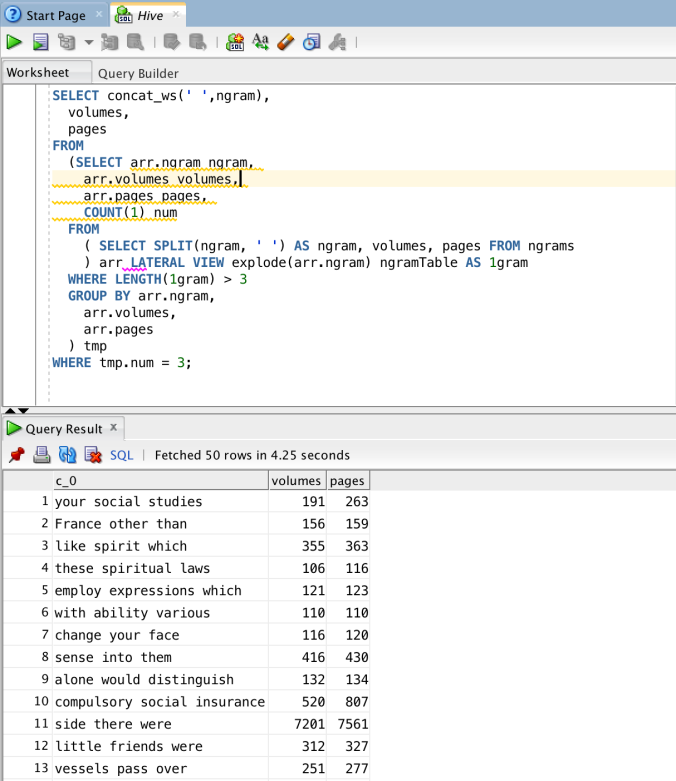

For testing purpose, I’ve downloaded some ngrams data from S3 (link), and created a new Table ngrams on Hive. I’m now able to get lightning fast SQL queries on Spark from a user friendly environment (SQL Developer).

As initially said, this seems quite hacky (Remember I used Cloudera JDBC on Hortonworks environment), but it seems to work, and it seems to be really fast ! Please let me know the environment / distribution you’re using if you’re facing any issue..

Cheers!

Antoine

Pingback: Spark: Connect Tableau Desktop to SparkSQL | Hadoopi

Hello.

I’m curious your action is still work on AWS EMR 3.10.0.

I’ve created Spark SQL thrift server on Amazon 2.4.0 hadoop distribution including Pig, Spark, Hive. But JDBC connection is failed using your solution.

Should be working. Did not test it though.

Also make sure port is open (ssh tunneling if not)

Hi,

I am using Hive JDBC driver provided by Cloudera to access Hive tables.

[http://www.cloudera.com/content/www/en-us/downloads/connectors/hive/jdbc/2-5-4.html]

static String JDBC_DRIVER = “com.cloudera.hive.jdbc41.HS2Driver”;

// Define a string as the connection URL

private static final String CONNECTION_URL = “jdbc:hive2://localhost:10000/default”;

public static void main(String[] args) throws Exception {

Connection con = null;

Statement stmt = null;

ResultSet rs = null;

// Define a plain query

String query = “SELECT name FROM employees”;

// Define a parametrized query

try {

// Register the driver using the class name

Class.forName(JDBC_DRIVER);

// Establish a connection using the connection

// URL

con = DriverManager.getConnection(CONNECTION_URL,”hive”,””);

// Create a Statement object for sending SQL

// statements to the database

stmt = con.createStatement();

// Execute the SQL statement

rs = stmt.executeQuery(query);

If I use HiveServer2 , the above program works fine .

Now I want to run the SQL against Spark ,so I stop/kill HiveServer2 and start Spark thrift server but the program fails with following exception.

Please note that if I connect using beeline ,it works fine. Any idea what am I missing here.

/scratch/xyz/view_storage/xyz_bam_spark_Nov/jdk8u40/bin/java -server -classpath /scratch/xyz/spark-examples-master/first-example/SparkApp/.adf:/scratch/xyz/spark-examples-master/first-example/SparkApp/first-example/classes:/home/xyz/clouder_jdbc/jars/HiveJDBC41.jar:/home/xyz/clouder_jdbc/jars/libthrift-0.9.0.jar:/home/xyz/clouder_jdbc/jars/hive_metastore.jar:/home/xyz/clouder_jdbc/jars/hive_service.jar:/home/xyz/clouder_jdbc/jars/libfb303-0.9.0.jar:/home/xyz/clouder_jdbc/jars/log4j-1.2.14.jar:/home/xyz/clouder_jdbc/jars/ql.jar:/home/xyz/clouder_jdbc/jars/slf4j-api-1.5.11.jar:/home/xyz/clouder_jdbc/jars/slf4j-log4j12-1.5.11.jar:/home/xyz/clouder_jdbc/jars/TCLIServiceClient.jar:/home/xyz/clouder_jdbc/jars/zookeeper-3.4.6.jar -Djavax.net.ssl.trustStore=/tmp/trustStore291984617787443166.jks org.sparkexample.ClouderaJDBCHiveExample

log4j:WARN No appenders could be found for logger (org.apache.thrift.transport.TSaslTransport).

log4j:WARN Please initialize the log4j system properly.

java.sql.SQLException: [Cloudera][HiveJDBCDriver](500151) Error setting/closing session: Open Session Error.

at com.cloudera.hive.hivecommon.api.ExtendedHS2Client.openSession(Unknown Source)

at com.cloudera.hive.hivecommon.api.HS2Client.(Unknown Source)

at com.cloudera.hive.hivecommon.api.ExtendedHS2Client.(Unknown Source)

at com.cloudera.hive.hivecommon.api.ZooKeeperEnabledExtendedHS2Factory.createClient(Unknown Source)

at com.cloudera.hive.hivecommon.core.HiveJDBCCommonConnection.connect(Unknown Source)

at com.cloudera.hive.hive.core.HiveJDBCConnection.connect(Unknown Source)

at com.cloudera.hive.jdbc.common.BaseConnectionFactory.doConnect(Unknown Source)

at com.cloudera.hive.jdbc.common.AbstractDriver.connect(Unknown Source)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

Caused by: com.cloudera.hive.support.exceptions.GeneralException: [Cloudera][HiveJDBCDriver](500151) Error setting/closing session: Open Session Error.

… 10 more

Caused by: com.cloudera.hive.support.exceptions.GeneralException: CONN_SESSION_ERR

… 10 more

Process exited with exit code 0.

Did you ever figure it out? This comment is the only search result for ‘Error setting/closing session: Open Session Error.’ and I hit the same error connecting to HIVE (1.0.0; emr-4.6.0) from R.

Hi, have you resolved your problem? I met the same issue. If you have the answer ,please tell me . Great gratitude!!

Hi,

Have you resolved the issue?

I met the the same problem.

Great gratitude for your reply.

My email : guicooperate@163.com

HiveSQLClient is a free graphical standalone Java program that will allow you to connect to Kerberised (Kerberos enabled) Hive Cluster for managing Hive objects. Most of the client applications require MIT Kerberos client which needs an additional step to log in and renew to obtain a new Kerberos ticket.

A simple and lightweight program which doesn’t require MIT Kerberos client to be installed on your machine and will get a Kerberos ticket automatically when you log in.

Future HiveSQLClient version will support more authentication mechanisms.

This tool uses JDBC to connect to the hadoop cluster.

Please visit below website for more details.

https://hivesqlclient.github.io/site/

it worked for me, thanks

But, when I do select col1, col2 from table, it only shows data for col1